A common immediate reaction to a high-bandwidth, multi-finger input device is to imagine it as a gestural input device. Those of us in the business of multi-touch interface design are so often confronted with comparisons between our interfaces and the big-screen version of John Underkoffler’s URP: Minority Report.

The comparison is fun, but it certainly creates a challenge – how do we design an interface that is as high-bandwidth as has been promised by John and others, but which users are able to immediately walk up and use? The approach taken by many systems is to try to map their functionality onto the set of gestures a user is likely to find intuitive. Of course, the problem with such an approach is immediately apparent: the complexity and vocabulary of the input language are bounded by your least imaginative user.

In this article, I will describe the underpinnings of the gesture system being developed by our team at Microsoft Surface: the Self Revealing Gesture system. The goals of this system are precisely to address these problems: 1. To make the gestural system natural and intuitive, and 2. To make the language complex and rich.

Two Gesture Types: Manipulation Gestures and System Gestures

The goal of providing natural and intuitive gestures which are simultaneously complex and rich seems to contain an inherent contradiction. How can something complex be intuitive?

To address this problem, the Surface team set out to examine a single issue: which gestures are intuitive to our user population? To find out, a series of simple tasks was described, and the users were asked to perform the gesture that would lead to the desired response (eg: move this rectangle to the bottom of the display; reduce the contrast of the display). No gestures were actually enabled in the interface: this was effectively a paper exercise. To determine which gestures occurred naturally, the team recorded the physical movements of the participants, and then compared them across a large number of participants. The results were striking: there is a clear divide between two types of gestures – what we have come to call system gestures, and manipulation gestures.

Manipulation Gestures are gestures with a two-dimensional spatial movement or change in an object’s properties (eg: move this object to the top of the display, stretch this object to the width of the page). Manipulation gestures were found to be quite natural and intuitive: by and large, every participant performed the same physical action for each of the tasks.

System Gestures are gestures without a two-dimensional spatial movement or change in an object. These include gestures to perform operations such as removing redeye, printing a document, or changing a font. Without any kind of UI to guide the gesture, participants were all over the map in terms of their gestural responses.

The challenge to the designers, therefore, is to utilize spatial manipulation gestures to drive system functions. This will be a cornerstone in developing our Self Revealing Gestures. But – how? To answer this, we turn to an unlikely place: hotkeys.

Lessons from Hotkeys: CTRL vs ALT

Most users never notice that, in Microsoft Windows, there are two completely redundant hotkey languages. These languages can be broadly categorized as the Control and Alt languages (forgive me for not extending Alt to Alternative, but it could lead to too many unintentional puns). It is from comparing and contrasting these two hotkey languages that we draw some of the most important lessons necessary for Self-Revealing Gestures.

Control Hotkeys and the Gulf of Competence

We consider first the more standard hotkey language: the control language. Although the particular hotkeys are not the same on all operating systems, the notion of the control hotkeys is standard across many operating systems: we assign some modifier key (‘function’, ‘control’, ‘apple’, ‘windows’) to putting the rest of the keyboard into a kinaesthetically held mode. The user then presses a second (and possibly third) key to execute a function. For our purposes, what interests us is how a user learns this key combination.

Control hotkeys generally rely on two mechanisms to allow users to learn them. First, the keyboard keys assigned to their functions are often lexically intuitive: ctrl+p = print, ctrl+s = save, and so on:

Figure 1. The Control hotkeys are shown in the File menu in Notepad. Note that the key choices are selected to be ‘intuitive’ (by matching the first letter of the function name).

This mechanism works well for a small number of keys: according to Grossman, users learn hotkeys more quickly if their keys map to the function names. This is roughly parallel to the naïve designer’s notion of gesture mappings: we map the physical action to some property in its function. However, we quickly learn that this approach does not scale: frequently used functions may overlap in their first letter (consider ‘copy’ and ‘cut’). This gives rise to shortcuts such as F3 for ‘find next’:

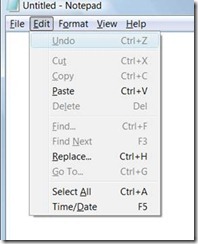

Figure 2. The Control hotkeys are shown in the Edit menu in Notepad. The first-letter mapping is lost in favor of physical convenience (CTRL-V for paste) or because of name collisions (F3 for Find Next – yes, F3 is a Control hotkey under my definition).
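To make the scaling problem concrete, here is a minimal Python sketch that groups a list of command names by first letter and reports the letters claimed by more than one command. The command list is illustrative only (it is not an exact copy of any real menu), and the function name is my own.

```python
from collections import defaultdict


def first_letter_collisions(function_names):
    """Group names by first letter; keep only letters claimed more than once."""
    by_letter = defaultdict(list)
    for name in function_names:
        by_letter[name[0].lower()].append(name)
    return {letter: names for letter, names in by_letter.items() if len(names) > 1}


# Illustrative command list (an assumption, not taken from a real application).
commands = ["Copy", "Cut", "Paste", "Print", "Save", "Find", "Find Next"]

for letter, names in first_letter_collisions(commands).items():
    print(f"ctrl+{letter} is contested by: {', '.join(names)}")
```

Even with seven commands, only one of them ('Save') gets an uncontested first letter, which is why the remaining shortcuts have to fall back on position or on unrelated keys.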

Because intuitive mappings can take us only so far, we provide a second mechanism for hotkey learning: the functions in the menu system (in the days before the ribbon[1]) are labelled with their Control hotkey invocation. This approach is a reasonable one: we provide users with an in-place help system labelling functions with a more efficient means of executing them. However, a sophisticated designer must ask themselves: what does the transition from novice to expert look like?

In the case of control shortcuts, the novice to expert transition requires a leap on the part of the user: we ask them to first learn the application using the mouse, pointing at menus and selecting functions graphically. To become a power user, they must then make the conscious decision to stop using the menu system, and begin to use hotkeys. At the time that they make this decision, it will at first come at the cost of a loss of efficiency, as they move from being an expert in one system, the mouse-based menus, to being a novice in the hotkey system. I call this cost the Gulf of Competence:

Figure 3. The learning curve of Control hotkeys: the user first learns to use the system with the mouse. They then must consciously decide to stop using the mouse and begin to use shortcut keys. This decision comes at a cost in efficiency as they begin to learn an all-new system. This cost is the ‘Gulf of Competence’.

The gulf of competence is easily anticipated by the user: they may know that hotkeys are more efficient, but they will take time to learn. We are asking a busy user to take the time to learn the interface. The Gulf of Competence is a chasm too far for most users: only a small set ever progress beyond the most basic control hotkeys, forever dooming them to the inefficient world of the WIMP. Thankfully we have a hotkey system that is far easier to learn: the ALT hotkeys.

Alt Hotkeys and the Seamless Novice to Expert Transition

While the Control hotkeys rely on either intuition or users willing to jump the Gulf of Competence, a far more learnable hotkey system exists in parallel to address both of these limitations: the Alt hotkey system. Like any hotkey system, the Alt approach modes the keyboard to provide a hotkey. Unlike the Control keys, however, on-screen graphics guide the user in performing the hotkey:

Figure 4. A novice Alt-hotkey user’s actions are exactly the same as an expert’s: no Gulf of Competence.

On-screen graphics guide the novice user in performing an Alt hotkey operation. Left: the menu system. Center: the user has pressed ‘Alt’. Right: the user has pressed ‘F’ to select the menu.

Because the Alt hotkeys guide the novice user, there is no need for the user to make an input device change: they don’t need to navigate menus first with the mouse, then switch to using the keyboard once they have memorized the hotkeys. Nor do we rely on user intuition to help them to ‘guess’ alt hotkeys.

The Alt-hotkey system is a self-revealing interface. Put another way, the physical actions of a novice user are the same as the physical actions of an expert user: Alt+f+o. There is no Gulf of Competence.
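The self-revealing property can be sketched in a few lines of Python. The menu tree, command names, and `run_alt_sequence` function below are hypothetical stand-ins, not the Windows implementation. The point is only that one key sequence serves both audiences: the novice reads the hints shown before each key press, and the expert types the same keys without looking.

```python
# Hypothetical menu tree: access key -> (label, submenu dict or command name).
MENU = {
    "f": ("File", {
        "n": ("New", "file.new"),
        "o": ("Open", "file.open"),
        "s": ("Save", "file.save"),
    }),
    "e": ("Edit", {
        "u": ("Undo", "edit.undo"),
        "p": ("Paste", "edit.paste"),
    }),
}


def run_alt_sequence(keys, menu=MENU):
    """Walk the menu one key at a time, as if Alt had just been pressed.

    Returns (command, hints). hints holds the on-screen prompts that were
    visible before each key press; command is None if no leaf was reached.
    """
    hints = []
    node = menu
    for key in keys:
        hints.append(sorted(f"{k.upper()}: {label}" for k, (label, _) in node.items()))
        if key not in node:
            return None, hints
        _, target = node[key]
        if isinstance(target, str):
            return target, hints
        node = target
    return None, hints


command, hints = run_alt_sequence("fo")
print(command)
for step in hints:
    print(step)
```

Whether or not the caller ever looks at `hints`, the input that produces `file.open` is identical, which is the whole idea.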

Applying Self Revealing Interfaces to Gesture Learning

Our goal on the Microsoft Surface team is to build a self-revealing gesture system that is modeled after the guiding principle of the Alt hotkeys: the physical actions of a novice user are identical to the physical actions of an expert user. Fortunately, we’re not the first ones to try to do this. As I type this, I happen to be visiting family in Toronto, overlooking the local Autodesk offices – once Alias Research. Many years ago, researchers working at Alias and at the University of Toronto built the first self-revealing gesture system: the marking menu.

Marking Menus: the First Self-Revealing Gestures

Marking ‘menu’ is a bit of a misnomer: it’s not actually a menu system at all. In truth, the marking menu is a system for teaching pen gestures. For those not familiar, marking menus are intended to allow users to make gestural ‘marks’ in a pen-based system. The pattern of these marks corresponds to a particular function. For example, the gesture shown below (right) leads to pasting the third clipboard item. The system does not rely at all on making the marks intuitive. Instead, Kurtenbach provided a hierarchical menu system (below, left). Users navigate this menu system by drawing through the selections with the pen. As they become more experienced, users do not rely on visual feedback, and eventually transition to interacting with the system through gestures, and not through the menu.

Figure 5. The marking-menu system (left) teaches users to make pen-based gestures (right)
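As a rough illustration of how a mark can be resolved, the Python sketch below quantises each stroke into one of eight compass directions and walks a small hierarchy. The eight-sector layout, the menu contents, and the command names are assumptions made for this example; they are not taken from Kurtenbach’s implementation.

```python
import math

DIRECTIONS = ["E", "NE", "N", "NW", "W", "SW", "S", "SE"]


def stroke_direction(dx, dy):
    """Quantise a stroke vector into one of eight 45-degree sectors (y points up)."""
    angle = math.degrees(math.atan2(dy, dx)) % 360
    return DIRECTIONS[int((angle + 22.5) // 45) % 8]


# Hypothetical two-level hierarchy: direction -> item with a command or children.
MARKING_MENU = {
    "W": {"label": "Copy", "command": "copy"},
    "E": {"label": "Paste", "children": {
        "N": {"label": "Clipboard item 1", "command": "paste.1"},
        "E": {"label": "Clipboard item 2", "command": "paste.2"},
        "S": {"label": "Clipboard item 3", "command": "paste.3"},
    }},
}


def resolve_mark(strokes, menu=MARKING_MENU):
    """Follow a sequence of (dx, dy) strokes; return the command or None."""
    children = menu
    command = None
    for dx, dy in strokes:
        item = children.get(stroke_direction(dx, dy))
        if item is None:
            return None
        command = item.get("command")
        children = item.get("children", {})
    return command


# A stroke to the right followed by a stroke downward.
print(resolve_mark([(10, 0), (0, -10)]))
```

The resolver never asks whether the menu was visible while the strokes were drawn, so a novice tracing through the displayed items and an expert flicking the same shape from memory end up on the same command.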

Just like the Alt-menu system, the physical actions of the novice user are physically identical to those of the expert. There is no Gulf of Competence, because there is no point where the user must change modalities, and throw away all their learnings. So – how can we apply this to multi-touch gestures?

Self-Revealing Multi-Touch Gestures

So – it seems someone else has already done some heavy lifting on the creation of a self-revealing gesture system. Why not use that system and call it a day? Well, if we were willing to have users behave with their fingers the way they do with a pen – we’d be done. But, the promise of multi-touch is more than a single finger drawing single lines on the screen. We must consider: what would a multi-touch self-revealing gesture system look like?

First, a quick word on what a ‘gesture’ really is. According to Wu and his co-authors, a gesture consists of three stages: Registration, which sets the type of gesture to be performed, Continuation, which adjusts the parameters of the gesture, and Termination, which is when the gesture ends.

Figure 6. The three stages of gestural input, and the physical actions which lead to them on a pen or touch system. OOR is ‘out of range’ of the input device.

In the case of pen marks, registration is the moment the pen hits the tablet, continuation happens as the user makes the marks for the menu, and termination occurs when the user lifts the pen off the tablet. Seems simple enough.
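For the pen case, the three stages can be sketched as a tiny state machine in Python. The event names (`down`, `move`, `up`) and the `track_stages` function are my own simplifications, and out-of-range handling is deliberately left out.

```python
from enum import Enum


class Stage(Enum):
    IDLE = "idle"
    REGISTRATION = "registration"
    CONTINUATION = "continuation"
    TERMINATION = "termination"


def track_stages(events):
    """Map a stream of pen events ('down', 'move', 'up') to gesture stages."""
    stage = Stage.IDLE
    history = []
    for event in events:
        if event == "down" and stage in (Stage.IDLE, Stage.TERMINATION):
            stage = Stage.REGISTRATION  # pen touches the tablet
        elif event == "move" and stage in (Stage.REGISTRATION, Stage.CONTINUATION):
            stage = Stage.CONTINUATION  # user draws the mark
        elif event == "up" and stage in (Stage.REGISTRATION, Stage.CONTINUATION):
            stage = Stage.TERMINATION  # pen lifts off the tablet
        else:
            continue  # ignore events that do not fit the current stage
        history.append(stage)
    return history


for stage in track_stages(["down", "move", "move", "up"]):
    print(stage.value)
```

Note that registration here carries no information beyond "the pen is down", which is exactly what stops being true once a whole hand is involved.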

When working with a pen, the registration action is always the same: the pen comes down on the tablet. The marking-menu system kicks in at this point, and guides the user’s continuation of the gesture – and that’s it. The trick in applying this technique to a multi-touch system is that the registration action varies: it’s always the hand coming down on the screen, but the posture of that hand is what registers the gesture. On Microsoft Surface, these postures can be any configuration of the hand: putting a hand down in a Vulcan salute maps to a different function than putting down three fingertips, which is different again from a closed fist. On less-enabled hardware, such as that supported by Win 7 or Windows Mobile 7, the posture is some combination of the relative positions of multiple fingertips. Nonetheless, the problem is the same: we now need to provide a self-revealing mechanism to afford a particular initial posture for the gesture, because this initial posture is the registration action which modes the rest of the gesture. Those marking menu guys had it easy, eh?

But wait – it gets even trickier.

In the case of marking menus, there is no need to afford an initial posture, because there is only one of them. On a multi-touch system, we need to tell the user which posture to go into before they touch the screen. Uh oh. With nearly all of the multi-touch hardware we are supporting, the hand is out of range right up until it touches the screen. Ugh.

So, what can be done? We are currently banging away on some designs – but here’s some intuition:

Idea 1: Provide affordances for every possible initial posture, on every possible position on the display. This doesn’t seem very practical, but at least it helps you to understand the scope of the problem: we don’t know which postures to afford, nor do we know where the user is going to touch before it’s too late. So, the whole display is a grid of multi-touch marking menus. Let’s call that plan C.

Idea 2: Utilize the limited, undocumented (ahem) hover capabilities of the Microsoft Surface hardware (aka – cheat ;). With this, we know where the user’s hand is before they touch. We still display all the postures, but at least we know where to do it.

Idea 3: Change the registration action. If you found yourself asking a few paragraphs ago, ‘why does gesture registration have to happen at the moment the user first touches the screen?’, you’ve won… well, nothing. But you should come talk to me about an open position on my team. So, the intuition here is: let the user touch the screen to tell us where it is they want to perform the gesture. Next, show on-screen affordances for the available postures and their functions, and allow the user to register the gesture with a second posture, in approximately the same place as the first. From there, have the users perform manipulation gestures with the on-screen graphics, since, as we learned oh so many paragraphs ago: manipulation gestures are the only ones that users can learn to use quickly, and are the only ones that we have found to be truly ‘natural’.
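Here is one way the flow in Idea 3 might be sketched in Python. Everything specific in it is an assumption for illustration: the posture names, the functions they map to, the 80-pixel tolerance, and the class itself. It is not our design, just the shape of the idea: a first touch fixes the location and reveals the options, and a second posture near that spot registers the gesture.

```python
import math

# Hypothetical posture-to-function table.
POSTURE_FUNCTIONS = {
    "fist": "erase",
    "flat_hand": "show_clipboard",
    "three_fingers": "rotate_canvas",
}
ANCHOR_RADIUS = 80.0  # assumed tolerance, in pixels, around the first touch


class TwoStepRegistration:
    def __init__(self):
        self.anchor = None      # where the user first touched
        self.registered = None  # function chosen by the second posture

    def first_touch(self, x, y):
        """Record the location and return the affordances to draw there."""
        self.anchor = (x, y)
        self.registered = None
        return sorted(POSTURE_FUNCTIONS.items())

    def second_posture(self, posture, x, y):
        """Register the gesture if the posture lands close to the anchor."""
        if self.anchor is None:
            return None
        if math.dist(self.anchor, (x, y)) > ANCHOR_RADIUS:
            return None
        self.registered = POSTURE_FUNCTIONS.get(posture)
        return self.registered


session = TwoStepRegistration()
for posture, function in session.first_touch(100, 100):
    print(f"show affordance: {posture} -> {function}")
print(session.second_posture("fist", 120, 110))
```

Once `registered` is set, the rest of the interaction would be ordinary manipulation of the on-screen graphics, which this sketch does not attempt to model.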

Our work isn’t far enough along yet to share broadly, but watch this space. Soon enough, we hope to have a fully functioning, self-revealing multi-touch gesture system ready to share.

Our Team’s Work

Over the next several months, we will be building the gesture set for the Microsoft Surface. It is our intention that this rich set of gestures will define the premium touch experience in a Microsoft product, and that this experience will be taken and scaled to other platforms in future. Once we have developed the gesture language we want to perform, we’ll adapt our best thinking on the self revealing gestures to making this rich, complex gesture set seem intuitive to the user – even if it isn’t really. Neat, huh?

[1] I will set aside a rant about how the Ribbon has attacked hotkey learning. If you happen to work on that project, however, I implore you: hotkey users are rare, but they are our power users and are our biggest proponents. In moving from Office 2003 to Office 2007, I went from being a power user, never having to touch the mouse, to a complete novice. Other power users have told me harrowing tales of buying their own copies of Office 2003 when their support teams stopped making it available.