The first and most immediate point to understand is that technology is not, in and of itself, a natural user interface (NUI). Certain input technologies, such as those that detect touch, speech, and even in-air gestures, might lend themselves to building a NUI. But just because those modalities are used does not mean that a NUI will result. NUI lies in the experience that is built using the technology, not in the technology itself.

To help make that point, I thought I’d do a quick analysis of two existing products that use direct touch but which, in some instances, miss the mark of creating a NUI.

Direct Touch Enables Direct Manipulation – but Does Not Guarantee It

One of the tenets of the Microsoft Surface user experience guidelines is to use direct manipulation, a form of direct input. There are three different terms that use the word ‘direct’ with respect to touch input – let’s quickly define each:

Direct Touch: a touch input device that is coincident with a display. The opposite of direct touch is indirect touch, where the touch input device is offset from the display – like the trackpad on a laptop.

Direct Input: a particular arrangement of direct touch in which the input device is mapped 1:1 to the display device, so that actions performed on the screen are sent to the object beneath the finger. Other arrangements are possible, but uncommon. One example that deliberately breaks direct input is the Hybrid Pointing system, which lets users reach far-away parts of a large screen without having to walk across the room.

Direct Manipulation: a particular design approach that can be used when building an interface. It is a principle that says “anything on the screen, I can touch to adjust”. For example, volume is changed on a screen by reaching out and touching the current volume indicator. To move something, one simply touches it and moves a finger across the screen. Somewhat perversely, one can build a direct manipulation system without using direct touch (or touch at all) – the Windows volume control is a great example of direct manipulation. For a good touch experience, however, direct manipulation is essential – users have a greater expectation of direct manipulation with direct touch than with indirect input.
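To make the difference between direct and indirect input concrete, here is a minimal Python sketch. Everything in it is made up for illustration (the `Widget` class, the `hit_test` helper, the `IndirectPointer` class and its `gain` parameter); it is not taken from any of the products discussed here. With direct input, the touch coordinates are the display coordinates, so the target is simply whatever sits beneath the finger. With indirect input, the pad reports relative motion that moves a cursor, and the target is whatever sits beneath the cursor.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Widget:
    """A rectangular on-screen object (hypothetical, for illustration)."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        return (self.x <= px < self.x + self.width
                and self.y <= py < self.y + self.height)


def hit_test(widgets: List[Widget], px: float, py: float) -> Optional[Widget]:
    """Return the topmost widget under a display point, or None."""
    # Later widgets in the list are assumed to be drawn on top.
    for widget in reversed(widgets):
        if widget.contains(px, py):
            return widget
    return None


def direct_input_target(widgets: List[Widget],
                        touch_x: float, touch_y: float) -> Optional[Widget]:
    """Direct input: sensor coordinates map 1:1 to display coordinates."""
    return hit_test(widgets, touch_x, touch_y)


class IndirectPointer:
    """Indirect input: relative motion on a pad moves an on-screen cursor."""

    def __init__(self, x: float, y: float, gain: float = 2.0) -> None:
        self.x = x
        self.y = y
        self.gain = gain  # assumed pad-to-screen scaling factor

    def move(self, dx: float, dy: float) -> None:
        self.x += dx * self.gain
        self.y += dy * self.gain

    def target(self, widgets: List[Widget]) -> Optional[Widget]:
        return hit_test(widgets, self.x, self.y)
```

Note that both paths end in the same hit test. Whether the interface then offers direct manipulation depends on what the designer lets the targeted object do, not on which path delivered the touch.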

To fully understand why direct manipulation is important for touch, let’s take a look at two interfaces, where they accomplish direct manipulation, and where they don’t.

Case Study of Direct Input and Direct Manipulation: Chrysler MyGig

The MyGig system is a navigation and audio device that sits in my Jeep. The interface has a lot of problems and is not a study in great design, but for this article it serves as a good example.

Like most direct touch systems, the MyGig universally uses a direct-input metaphor – touches to the screen are mapped to the object beneath the finger. The primary navigation screen, shown below, has three great examples to help understand the concept of direct manipulation:

The Chrysler MyGig UI. Yes, I stopped at a light when I took the photo. Call-outs below.

The first obvious example of a failure to use direct manipulation is the use of a button to do zooming (#1). On multi-touch screens, zooming is typically accomplished with the classic two-finger zoom gesture (a friend of mine calls this the ‘swimming’ gesture), or with multi-tapping on the map directly. Here, instead, the user taps the button at #1, which brings up another control for selecting the zoom level.

#2 shows a great use of direct manipulation. There’s the clock, hanging out on the bottom-left of the screen. Want to set the time? Just reach out and touch it. I sing a song of joy every time I do this. A complete interpretation of direct manipulation might allow you to move hands on an analog clock, or drag a virtual dial behind each digit (a la the iPhone time picker). I won’t get into the mechanics of the Jeep’s time setter – suffice to say they don’t follow direct manipulation entirely. But, understand the essence of the idea here – the time is shown on the display. To change it, you touch it. Wow.

#3 shows a missed opportunity. The current radio station is shown on the screen. Given the great use of direct manipulation for the clock, one might have hoped they’d keep it up. Want to change the radio station? Just reach out and touch it, right? Alas, no – tapping that readout does absolutely nothing. Following the principle of direct manipulation, touching there would provide an opportunity to change the station. We get three problems for the price of one. First, direct manipulation is missing on the radio station. Second, there is an obvious inconsistency in the navigation scheme – sometimes I touch the thing I want to change, other times I have to go elsewhere. Third, touching the station doesn’t do anything at all: it doesn’t provide a message (“this is not how you set the station”), it doesn’t beep, it doesn’t do anything. My input gets ignored, and I get frustrated.

Case Study of Direct Input and Direct Manipulation: Cybershot DSC-TX1

I was recently given this Cybershot digital camera as a gift. Like the iPhone and the new Zune HD, this device uses touch as its input modality to enable a very large display. It’s a beautiful piece of technology – an excellent execution of industrial design. Like the MyGig, the interface has elements where direct manipulation is executed well, and elements where they missed the boat.

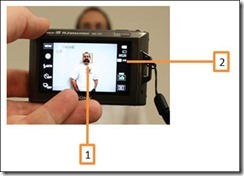

Sony’s Cybershot camera has a touch screen, implements direct input, and sometimes (but not consistently) uses direct manipulation. Call-outs below.

The Cybershot camera offers one great success and one clear failure in implementing direct manipulation. #1 shows an amazingly awesome feature: anywhere in the field of view, the user can tap to select where to focus. A few days ago I needed to take a picture of my VIN through my windshield. The darn thing kept focusing on reflections on the window. Then I remembered this feature – I just tapped where the VIN was shown in its blurry glory – and voila! Instant focusing awesomeness.

#2 shows a failure of the same kind as the MyGig. Look carefully, and you’ll see that the display shows both the resolution I have selected (3MP) and the aspect ratio (4:3). These are settings that can be changed. But when I touch this readout, nothing happens. I have to dig my way through menus to tell the interface which element I want to change, instead of just touching the darn pixels that are being used to show me the information. Sigh.

A NUI Design Principle: No Touch Left Behind

We at Surface have a principle that has helped us in our design work: leave no touch behind. What that means in practice is that every touch to the screen should do something consistent with the principle of direct manipulation. We also have a visualization system that does the bare minimum: it acknowledges that the touch was seen, even when the system is not going to respond to it. Obviously, the ideal would be for all applications built on our platform to adhere to our principle, but the contact visualizer at least reduces the impact of a failure to do so. Check out the video:
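In code, the “no touch left behind” idea might look something like the following Python sketch. This is not how the Surface contact visualizer is actually implemented; the `Region` class, the `dispatch_touch` and `acknowledge` functions, and the returned strings are all hypothetical stand-ins. The point is only the shape of the logic: a touch either reaches a handler or, at the very least, gets acknowledged. It is never silently dropped.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Region:
    """A rectangular area of the screen (hypothetical, for illustration)."""
    name: str
    x: float
    y: float
    width: float
    height: float
    # None means the region is a display-only readout with no touch behavior.
    on_touch: Optional[Callable[[], str]] = None

    def contains(self, px: float, py: float) -> bool:
        return (self.x <= px < self.x + self.width
                and self.y <= py < self.y + self.height)


def acknowledge(px: float, py: float) -> str:
    """Bare-minimum feedback: show the user that the touch was seen."""
    return f"ack: touch seen at ({px}, {py}), no action available"


def dispatch_touch(regions: List[Region], px: float, py: float) -> str:
    """Route a touch to the topmost region under it; never ignore it."""
    # Later regions in the list are assumed to be drawn on top.
    for region in reversed(regions):
        if region.contains(px, py):
            if region.on_touch is not None:
                return region.on_touch()
            break  # display-only region: fall through to the acknowledgement
    return acknowledge(px, py)
```

With a clock region that has a handler and a radio-station region that does not, a tap on the clock opens the time setter, while a tap on the station (or on empty screen) still produces visible feedback instead of silence.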

From Touch to Voice: The “Direct” Voice Response Interface

Ultimately, the principle of direct manipulation is not limited to touch. We can apply it equally to other modalities. To understand how, consider three possible voice response systems:

a. “For sales, say ‘1’, for support, say ‘2’,…”

b. “Which would you like: you can say ‘sales’, ‘support’,…”

c. “How can I help you?”

Setting aside how well the technology enables each of these, consider how ‘direct’ the mapping is in each of these cases between the user’s goal and what they have to give as input. Clearly, (c) is the most ‘direct’ – the user thinks about what they want, and they say it. There is no rephrasing the query in the system’s terms. (a) is the least direct, since the input is a completely arbitrary mapping between the goal and the input.
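The three styles can be put side by side in a toy Python sketch. The function names, the keyword lists, and the use of simple keyword spotting for style (c) are all assumptions made for illustration; a real system would rely on proper speech understanding, and the menus above are elided, so only the two departments named there appear here. What the sketch shows is where the translation work happens: in (a) and (b) the caller does it, while in (c) the system does.

```python
import re
from typing import Optional

DEPARTMENTS = ("sales", "support")

# (a) Arbitrary mapping: the caller must translate a goal into a digit.
DIGIT_MENU = {"1": "sales", "2": "support"}


def route_by_digit(utterance: str) -> Optional[str]:
    return DIGIT_MENU.get(utterance.strip())


# (b) The caller must repeat one of the system's own labels.
def route_by_label(utterance: str) -> Optional[str]:
    word = utterance.strip().lower()
    return word if word in DEPARTMENTS else None


# (c) The caller states the goal in their own words. Keyword spotting is a
# toy stand-in for real language understanding (an assumption of this sketch).
GOAL_KEYWORDS = {
    "sales": {"buy", "purchase", "price", "order"},
    "support": {"broken", "help", "fix", "problem"},
}


def route_by_goal(utterance: str) -> Optional[str]:
    words = set(re.findall(r"[a-z']+", utterance.lower()))
    for department, keywords in GOAL_KEYWORDS.items():
        if words & keywords:
            return department
    return None
```

A caller who says “My camera is broken” gets nowhere with the first two routers, because the utterance is not phrased in the system’s terms, but the third routes them to support.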

As you and your teams build out your NUI experiences, think about how the principle of direct manipulation can help you to make easier, more natural mappings between input and desired goal. Understand that this may well require you to provide on-screen affordances – don’t be shy about this. A “Natural” user interface does not mean the absence of digital information – it simply means that the mapping is one that can be quickly understood.

Final Word: Technology Ain’t NUI, and NUI Ain’t Technology

Ultimately, whether or not the experience is a ‘natural’ one is a function of the design of that experience. I believe strongly that a Natural User Interface could well be created for a mouse and keyboard. Certainly, there are modalities that lend themselves more easily to natural experiences, but without proper software design, a NUI you will not have.