In the broadest sense, touch as a technology refers to an input device that responds without the user having to depress an actuator. The advent of technologies that allow displays and input devices to overlap has given rise to direct touch input. With direct touch, we apply the effect of a touch to the area of the screen beneath the finger(s). This gives rise to claims of ‘more natural’ computing, and makes certain types of devices better (mobile devices, for example, like the Zune or a Windows Mobile phone). Direct touch, however, introduces a host of problems that make it ill-suited to most applications.

What’s With Direct Touch?

I recently attended a presentation in which the speaker described a brief history of Apple’s interaction design. The speaker noted that the iPhone’s touch interface was developed in response to the need for better input in the phone.

Phooey.

The iPhone’s direct-touch input was born of a desire to make the screen as large as possible. By eliminating a dedicated area for input, Apple could cover the whole front of the device with a big, beautiful screen (don’t believe me? Watch Jobs’ keynote again). The need to constantly muck up your screen with fingerprints is a consequence of the desire to have a large display – not a primary motivator. Once we understand this, we understand the single most important element of direct touch: it allows portable devices to have larger screens. But it also has a litany of negative points:

- Tiring for large and vertical screens (the Gorilla Arm effect)

- Requires users to occlude their content in order to interact with it (leading to the ‘fat finger problem’)

- Does not scale up well: human reach is limited

- Does not scale down well: human muscle precision is limited

There is also some evidence that direct touch allows children to use computers at a younger age, and that older novice computer users can learn to use it more quickly. Direct touch has also demonstrated utility in kiosk applications, characterized by short interaction periods and novice users. We on the Surface team also believe that direct touch is suitable for certain scenarios involving sustained use. Clearly, though, direct touch is far from being the be-all and end-all input technique – it has a whole host of problems that make it ill-suited to a number of scenarios.

What’s With Indirect Touch?

Indirect touch overcomes most of the negatives of direct touch.

- Scalable both up and down with C/D gain (control-display gain, aka ‘mouse speed’ in Windows – ‘touch speed’ in Windows 8?)

- Enables engaging interactions in the same way direct-touch does

- Fingers do not occlude the display during use

- Not tiring if done correctly: with the right scale factor (C/D gain) a large area can be affected with small movements
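To make the C/D gain idea concrete, here is a minimal sketch in Python. It assumes a constant gain (real pointer drivers usually vary the gain with speed), and every name in it (`Pointer`, `apply_cd_gain`, `px_per_mm`) is made up for illustration rather than taken from any real API. Gain is treated as the ratio of on-screen movement to finger movement, both measured in millimetres.

```python
from dataclasses import dataclass


@dataclass
class Pointer:
    """Cursor position on the display, in pixels (hypothetical type)."""
    x: float
    y: float


def apply_cd_gain(pointer, dx_mm, dy_mm, gain, px_per_mm, width_px, height_px):
    """Move the cursor in response to a finger movement on a touch pad.

    dx_mm, dy_mm: finger displacement on the pad, in millimetres.
    gain:         C/D gain, i.e. display movement divided by control movement.
    px_per_mm:    pixel density of the display (an assumed, known constant).
    """
    # Scale the physical finger movement by the gain, then convert to pixels.
    new_x = pointer.x + dx_mm * gain * px_per_mm
    new_y = pointer.y + dy_mm * gain * px_per_mm

    # Keep the cursor on the display.
    new_x = min(max(new_x, 0.0), width_px - 1)
    new_y = min(max(new_y, 0.0), height_px - 1)
    return Pointer(new_x, new_y)


# A 10 mm swipe with a gain of 4 on a 4 px/mm display moves the cursor 160 px.
start = Pointer(0.0, 0.0)
moved = apply_cd_gain(start, 10, 0, gain=4, px_per_mm=4,
                      width_px=1920, height_px=1080)
print(moved)
```

Raising the gain lets a small pad drive a wall-sized display; lowering it below 1 trades reach for precision, which is how indirect touch scales in both directions.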

Revolutionary Indirect Touch

Indirect touch enables a host of scenarios not possible with direct touch, many of which are much more compelling. Our first example <REDACTED FROM PUBLIC VERSION OF THE BLOG. MSFT EMPLOYEES CAN VIEW THE INTERNAL VERSION>

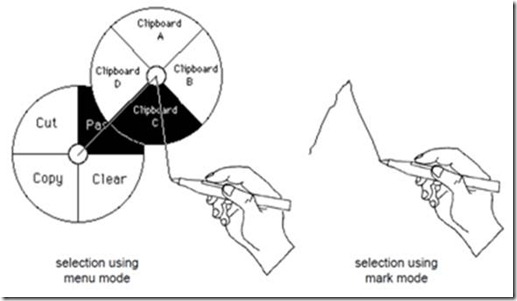

This demo is exciting and low-tech, but note that it is ‘single touch’ (not multi-touch). So, what would indirect multi-touch look like? Let’s take a look at three examples. The first is again from a collaboration between MS Hardware and MSR: Mouse 2.0, which enables multi-touch gestures on a mouse. Right around the time this research paper was published at ACM UIST, Apple announced their multi-touch mouse. While both are compelling, Mouse 2.0 offers several innovations that the Apple mouse lacks. Note the mixing of two different forms of indirect interaction: the mouse pointer and ‘touch pointer’:

Figure 2: Mouse 2.0 adds multi-touch capabilities to the world’s second most ubiquitous computer input device.

Indirect touch is also highly relevant for very large displays. In this system, a user sits at a table in front of a 20’ high-resolution display. Instead of having to walk across the room to use the display, the user sits and performs indirect touch gestures. The left hand positions the control area on the display, and the right hand performs touch gestures within that area. This demonstrates how indirect touch is far more scalable than direct touch, and also shows a good way to use two hands together:

Figure 3: Two-handed indirect touch interaction with very large displays.
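The two-handed mapping described above can be sketched roughly as follows. This is only an illustration of the idea, not the actual system: it assumes the left hand has already placed a rectangular control area on the display and that the right hand's touches arrive as normalized pad coordinates between 0 and 1. `ControlArea` and `pad_to_display` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ControlArea:
    """Rectangle on the large display, in pixels, positioned by the left hand."""
    left: float
    top: float
    width: float
    height: float


def pad_to_display(u, v, area):
    """Map a right-hand touch at normalized pad position (u, v) into the area.

    (0, 0) is the pad's top-left corner and (1, 1) its bottom-right corner.
    """
    # Clamp so that a touch at the pad edge never leaves the control area.
    u = min(max(u, 0.0), 1.0)
    v = min(max(v, 0.0), 1.0)
    return (area.left + u * area.width, area.top + v * area.height)


# The left hand parks an 800x600 control area somewhere on the big display;
# a touch in the middle of the pad lands in the middle of that area.
area = ControlArea(left=1000, top=200, width=800, height=600)
print(pad_to_display(0.5, 0.5, area))
```

Moving the control area changes where touches land without the user leaving their seat, and resizing it changes the effective gain of the right hand.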

Also for large displays: this time, televisions. An indirect touch project from Panasonic shows how we can get rid of the mass of buttons on a remote control: display only the buttons that you need at the moment. To allow this to be done cheaply, they built a remote control that has only two touch sensors (with actuators, so that you can push down to select). The visual feedback is shown on the screen:

Figure 4: Panasonic’s prototype remote control has no buttons: the interface is shown on the TV screen, customized for the current context of use.

Finally, the TactaPad demonstrates how indirect multi-touch interaction could be added to existing applications without the many drawbacks of direct touch. The TactaPad uses vision and a touch pad to create a device that can detect the position of the hands while in the air, and send events to targeted applications when the user touches the pad. While less subtle, this starts to show how a generic indirect multi-touch peripheral might behave in an existing suite of applications:

Figure 5: The TactaPad provides an indirect touch experience to existing applications.

From this grab-bag of indirect touch projects, we can start to see a pattern emerge: direct touch is appropriate for some applications, but is absolutely the wrong tool for others. Indirect touch fills the many voids left by direct touch. Indirect touch interaction allows users to operate at a distance, to scale their interactions, and to work in a less tiring way. If touch is the future of interaction, indirect touch is the way it will be achieved. Apple’s trackpad features a set of indirect touch gestures that their customers use every day to interact with their MacBooks. My girlfriend recently turned down a Boot Camp install of Windows 7 because it doesn’t support her trackpad gestures.

These gestures are usable (natural? No such thing) and definitely useful. As always, the key to success is a real understanding of user needs and the fundamentals of available technologies. From there, we can begin to design software and hardware solutions to those needs in a way that provides an exciting, compelling, and truly useful experience.