My commitments include driving towards a standardized gesture language across Microsoft. Given this, someone asked me recently: why are the gestures across our products not the same, and why are you designing new gestures for Surface that aren’t the same as those in Windows?

The answer is in two parts. The first is to point out that manipulations, a specific type of gesture, are standardized across the company, or in the process of being standardized. The manipulation processor is a control in a bunch of different platforms, including both Microsoft Surface and Windows 7. The differences lie in non-manipulation gestures (what I have previously called ‘system gestures’). So – why are we developing different non-manipulation gestures than are included in Win 7?

Recall: Anatomy of a Gesture

Recall from a previous post that gestures are made up of three parts: registration, continuation, and termination. For the engineers in the audience, a gesture can be thought of as a function call: the user selects the function during the registration phase, specifies the parameters of the function during the continuation phase, and the function is executed at the termination phase:

Figure 1: stages of a gesture: registration (function selection), continuation (parameter specification), termination (execution)
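To make the function-call analogy concrete, here is a minimal sketch in Python. The `Gesture` class and the `move_object` action are hypothetical names for illustration only, not part of any Microsoft API: registration picks the function, continuation accumulates contact positions as its parameters, and termination calls it.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Point = Tuple[float, float]


@dataclass
class Gesture:
    """A gesture modeled as a deferred function call (hypothetical sketch)."""
    # Registration: choosing the gesture fixes which function will run.
    action: Callable[[List[Point]], str]
    # Continuation: contact positions accumulate as the call's parameters.
    samples: List[Point] = field(default_factory=list)

    def continue_with(self, point: Point) -> None:
        self.samples.append(point)

    def terminate(self) -> str:
        # Termination: lifting the finger executes the selected function.
        return self.action(self.samples)


def move_object(path: List[Point]) -> str:
    """Hypothetical action: report the net displacement of the contact."""
    (x0, y0), (x1, y1) = path[0], path[-1]
    return f"moved by ({x1 - x0}, {y1 - y0})"


gesture = Gesture(action=move_object)          # registration
for p in [(0.0, 0.0), (5.0, 2.0), (10.0, 4.0)]:
    gesture.continue_with(p)                   # continuation
print(gesture.terminate())                     # termination: moved by (10.0, 4.0)
```

Note that nothing is executed until termination; that is exactly why the analogy is useful for thinking about when the system can safely give feedback.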

To draw from an example you should all now be familiar with, consider the two-finger diverge (‘pinch’) in the manipulation processor – see the table below. I put this into a table in the hopes you will copy and paste it, and use it to classify your own gestures in terms of registration, continuation, and termination actions.

| Gesture Name | Logical Action | Registration | Continuation | Termination |
|---|---|---|---|---|
| ManipulationProcessor/Pinch | Rotates, resizes, and translates (moves) an object. | Place two fingers on a piece of content. | Move the fingers around on the surface of the device: the changes in the length, center position, and orientation of the line segment connecting these points are applied 1:1 to the scale (both height and width), center position, and orientation of the content, respectively. | Lift the fingers from the surface of the device. |

Table 1: stages of multi-touch gestures.
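The continuation column for the pinch is really a small piece of geometry. Here is a sketch of it in Python, assuming the gesture is described by the two finger positions at contact-down and the two current positions; `pinch_delta` is a hypothetical helper, not the manipulation processor's actual API. It derives scale from the segment length, rotation from the segment angle, and translation from the segment center.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def pinch_delta(a0: Point, b0: Point, a1: Point, b1: Point):
    """Scale, rotation and translation implied by a two-finger pinch.

    a0, b0 are the finger positions at contact-down; a1, b1 are the current
    positions. Each change in the connecting segment maps 1:1 onto the content.
    """
    def length(p: Point, q: Point) -> float:
        return math.hypot(q[0] - p[0], q[1] - p[1])

    def angle(p: Point, q: Point) -> float:
        return math.atan2(q[1] - p[1], q[0] - p[0])

    def center(p: Point, q: Point) -> Point:
        return ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2)

    start_len = length(a0, b0)
    if start_len == 0:
        raise ValueError("the two starting contacts must be distinct")

    scale = length(a1, b1) / start_len                      # same factor for height and width
    turn = angle(a1, b1) - angle(a0, b0)
    rotation = math.atan2(math.sin(turn), math.cos(turn))   # wrap into [-pi, pi]
    c0, c1 = center(a0, b0), center(a1, b1)
    translation = (c1[0] - c0[0], c1[1] - c0[1])
    return scale, rotation, translation


# Fingers start 2 units apart horizontally and end 4 units apart vertically.
print(pinch_delta((0, 0), (2, 0), (1, 1), (1, 5)))
```

Because all three quantities come from the same two contacts, rotate, resize, and translate happen together in one gesture with no mode switch.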

Avoiding Ambiguity

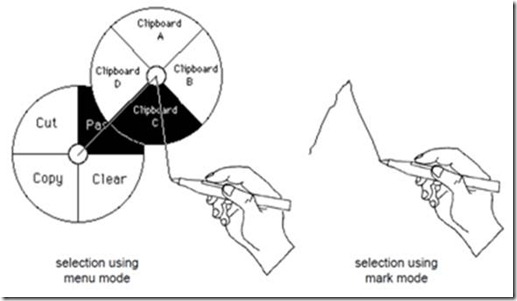

The design of the manipulation processor is ‘clean’, in a particularly nice way. To understand this, consider our old friend the Marking Menu. For those not familiar, think of it as a gesture system in which the user flicks their pen/finger in a particular direction to execute a command (there is a variation of this in Win 7, minus the menu visual):

Figure 2: Marking menus
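A marking menu essentially divides the circle of possible flick directions into equal sectors, one per command. The following sketch shows that generic technique (it is not the Windows 7 implementation); `marking_menu_item` and the eight compass labels are illustrative assumptions.

```python
import math
from typing import Sequence


def marking_menu_item(dx: float, dy: float, items: Sequence[str]) -> str:
    """Map a flick vector to one of len(items) equal angular sectors.

    items[0] is centred on the +x ("east") direction and later items proceed
    counter-clockwise. Screen y grows downward, so dy is negated.
    """
    if dx == 0 and dy == 0:
        raise ValueError("a flick needs a direction")
    n = len(items)
    sector = 2 * math.pi / n
    angle = math.atan2(-dy, dx) % (2 * math.pi)  # 0 = east, counter-clockwise
    # Shift by half a sector so each item's sector is centred on its direction.
    index = int(((angle + sector / 2) % (2 * math.pi)) // sector) % n
    return items[index]


EIGHT_WAY = ["E", "NE", "N", "NW", "W", "SW", "S", "SE"]
print(marking_menu_item(10, 10, EIGHT_WAY))  # down-right on screen -> "SE"
```

Each sector spans `2π / n` radians, so adding options narrows every target; this is the reason the error rate climbs once a marking menu goes past about 8 options.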

Keeping this in mind, let’s examine the anatomy of a theoretical ‘delete’ gesture:

| Gesture Name | Logical Action | Registration | Continuation | Termination |
|---|---|---|---|---|
| Theoretical/Delete | Delete a file or element. | 1. Place a finger on an item; 2. flick to the left. | None. | Lift the finger from the surface of the device. |

Table 2: stages of the Theoretical/Delete gesture.

Two things are immediately apparent. The first is that this gesture has no continuation phase. This isn’t surprising, since the delete command has no parameters: there is only one way to ‘delete’ something. The second striking thing is that registration requires two steps. First, the user places their finger on an element, then they flick to the left.

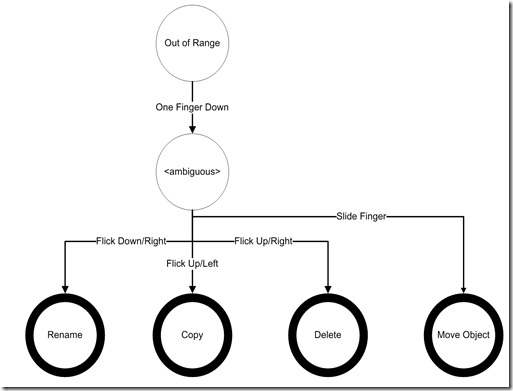

Requiring two steps to register a gesture is problematic. First, it increases the probability of an error, since the user must remember multiple steps. Second, the error probability increases further if the options in the second step are crowded too close together (e.g., a marking menu with more than 8 options, where each direction covers only a narrow slice of the circle). Third, it requires an explicit mechanism to transition between the registration and continuation phases: if flick-right is ‘resize’, how does the user then specify the size? Either the size is a separate gesture, which requires a modal interface, or the user keeps their hand on the screen and needs some way to say ‘I am now done registering; I would like to start the continuation phase’. Last, the system cannot respond to the user’s gesture in a meaningful way until the registration step is complete, which delays feedback.

Let’s consider a system which implements just 4 gestures: one for manipulation of an object (grab and move it), along with 3 for system actions (‘rename’, ‘copy’, ‘delete’) using flick gestures. We can see the flow of a user’s contact in Figure 3. When the user first puts down their finger, the system doesn’t know which of these 4 gestures the user will be doing, so it’s in the state labelled ‘<ambiguous>’. Once the user starts to move their finger around the table at a particular speed and in a particular direction (‘flick left’ vs. ‘flick right’) or pattern (‘slide’ vs. ‘question mark’), the system can resolve that ambiguity, and the gesture moves into the registration phase:

Figure 3: States of a hand gesture, up to and including the end of the Registration phase – continuation and termination phases are not shown.

| Gesture Name | Logical Action | Registration | Continuation | Termination |
|---|---|---|---|---|
| Theoretical/Rename | Put the system into “rename” mode (the user then types the new name with the keyboard). | 1. Place a finger on an item; 2. flick the finger down and to the right. | None. | Lift the finger from the surface of the device. |
| Theoretical/Copy | Create a copy of a file or object, immediately adjacent to the original. | 1. Place a finger on an item; 2. flick the finger up and to the left. | None. | Lift the finger from the surface of the device. |
| Theoretical/Delete | Delete a file or element. | 1. Place a finger on an item; 2. flick the finger up and to the right. | None. | Lift the finger from the surface of the device. |
| ManipulationProcessor/Move | Change the visual position of an object within its container. | 1. Place a finger on an item; 2. move the finger slowly enough not to register as a flick. | Move the finger around the surface of the device. Changes in the position of the finger are applied 1:1 as changes to the position of the object. | Lift the finger from the surface of the device. |

Table 3: stages of various theoretical gestures, plus the manipulation processor’s 1-finger move gesture.
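One possible recognizer for the Table 3 vocabulary looks like the sketch below. It assumes a speed threshold separates flicks from moves and that a slow contact only counts as a ‘move’ once a short decision window has elapsed. `FLICK_SPEED`, `DECISION_WINDOW`, and the down-left fallback are hypothetical values and choices, not taken from any shipping system.

```python
import math
from typing import List, Optional, Tuple

Sample = Tuple[float, float, float]  # (time in s, x, y); screen y grows downward

FLICK_SPEED = 1000.0      # hypothetical: px/s at or above which motion is a flick
DECISION_WINDOW = 0.1     # hypothetical: seconds of slow input before committing to move


def classify(samples: List[Sample]) -> Optional[str]:
    """Classify a contact against the Table 3 vocabulary.

    Returns None while the user's intent is still ambiguous.
    """
    if len(samples) < 2:
        return None  # finger-down alone carries no gesture information here
    t0, x0, y0 = samples[0]
    t1, x1, y1 = samples[-1]
    dt = t1 - t0
    if dt <= 0:
        return None
    dx, dy = x1 - x0, y1 - y0
    speed = math.hypot(dx, dy) / dt
    if speed < FLICK_SPEED:
        # Too slow for a flick so far; only after the window can we commit to move.
        return "move" if dt >= DECISION_WINDOW else None
    # Fast enough: pick a flick by quadrant (directions from Table 3).
    if dx > 0 and dy > 0:
        return "rename"   # down and to the right
    if dx < 0 and dy < 0:
        return "copy"     # up and to the left
    if dx > 0 and dy < 0:
        return "delete"   # up and to the right
    return "move"         # hypothetical fallback: no flick is defined down-left


print(classify([(0.0, 0.0, 0.0), (0.02, 30.0, -30.0)]))  # delete
```

The `None` return on contact-down is the ‘<ambiguous>’ state from Figure 3. Whatever the thresholds are, the system has to wait some number of frames before it can commit.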

Consequences of Ambiguity

Let’s look first at how the system classifies the gestures: if the finger moves fast enough, it is a ‘flick’, and the system goes into ‘rename’, ‘copy’, or ‘delete’ mode based on the direction. Now consider what happens during the few frames of input while the system is testing whether the user is executing a flick. Since it cannot yet rule out that the user simply intends to move the object quickly, the input is ambiguous with the ‘move object’ gesture. The simplest approach is for the system to assume each gesture is a ‘move’ until it knows better. Consider the interaction sequence below:

Figure 4: Interaction of a ‘rename’ gesture. 1: the user places a finger on the object. 2: the user has slid their finger, with the object following along. 3: the ‘rename’ gesture has registered, so the object pops back to its original location.

Because the user’s intention is unclear for the first few frames, the system designers have a choice. Figure 4 represents one option: assume that the ‘move’ gesture is being performed until another gesture is registered after analyzing a few frames of input. This is good, because the user gets immediate feedback. It’s bad, however, because the feedback is wrong: we are showing the ‘move’ gesture, but the user is trying to perform a ‘rename’ flick. The system has to undo the ‘move’ when ‘rename’ registers, and we get an ugly popping effect. We could avoid this problem by providing no response until the user’s action is clear. That would fix the bad feedback in the ‘rename’ case, but consider the consequence for the ‘move’ case:

Figure 5: Interaction of a ‘move’ gesture in a thresholded system. 1: the user places a finger on an object. 2: the user slides their finger along the surface; the object does not move, because the system does not yet know that the ‘flick’ threshold will not be met. 3: the object jumps to catch up to the user’s finger.

Obviously, this too is a problem: the system does not provide the user with any feedback at all until it is certain that they are not performing a flick.
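The two feedback policies can be compared side by side with a small simulation. This sketch assumes the object is grabbed at the contact point (so it tracks the finger 1:1) and that the recognizer resolves at a known frame `resolved_at`; both functions are hypothetical illustrations, not a real toolkit’s behaviour.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def optimistic_feedback(finger: List[Point], resolved_at: int, gesture: str) -> List[Point]:
    """Object position per frame if every contact is treated as 'move' until
    the recognizer registers something else.

    finger[0] is the contact-down position and the object starts there;
    resolved_at is the frame at which `gesture` registered.
    """
    start = finger[0]
    frames = []
    for i, p in enumerate(finger):
        if i >= resolved_at and gesture != "move":
            frames.append(start)   # undo the provisional move: the visible 'pop'
        else:
            frames.append(p)       # follow the finger, possibly wrongly
    return frames


def thresholded_feedback(finger: List[Point], resolved_at: int, gesture: str) -> List[Point]:
    """Object position per frame if nothing moves until the intent is known."""
    start = finger[0]
    frames = []
    for i, p in enumerate(finger):
        if i >= resolved_at and gesture == "move":
            frames.append(p)       # first visible update is a jump to catch up
        else:
            frames.append(start)   # no feedback while the input is ambiguous
    return frames


path = [(0.0, 0.0), (10.0, 0.0), (20.0, 0.0), (30.0, 0.0)]
print(optimistic_feedback(path, resolved_at=2, gesture="rename"))
# [(0.0, 0.0), (10.0, 0.0), (0.0, 0.0), (0.0, 0.0)]  <- pops back at frame 2
print(thresholded_feedback(path, resolved_at=2, gesture="move"))
# [(0.0, 0.0), (0.0, 0.0), (20.0, 0.0), (30.0, 0.0)]  <- jumps at frame 2
```

Neither policy can fix the problem, because both are waiting on the same unresolved frames before `resolved_at`. They only choose where the error shows up.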

The goal, ultimately, is to eliminate the period during which the user’s intention is ambiguous. Aside from all of the reasons outlined above, ambiguity also creates a bad feedback situation. The user has put their finger down, and it has started moving: how soon should the recognizer click over to ‘delete’ mode, rather than wait to give the user a chance to do something else? The sooner it clicks, the more likely there will be errors. The later it clicks, the longer the user is left with ambiguous feedback. It’s just a bad situation all around. The solution is to tie the registration event to the finger-down event: as soon as the hand comes down on the display, the gesture is registered. The movement of the contacts on the display is then used only for the continuation phase of the gesture (i.e., specifying the parameters).
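Registration at contact-down can be sketched as a dispatch on whatever the hardware reports at the instant of touch. The `ContactDown` record and the shape labels `"fist"` and `"open_hand"` are hypothetical outputs of a shape classifier. The mappings follow the Surface gestures described in this post: a closed fist deletes, a full-hand diverge copies, and a two-point diverge is the pinch. Two-contact hardware can only reach the last two branches.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ContactDown:
    """What the hardware reports at the instant the hand touches down."""
    finger_count: int
    shape: Optional[str] = None  # hypothetical label from a shape classifier


def register(contact: ContactDown) -> str:
    """Choose the gesture at contact-down, so there is no ambiguous state.

    Later movement is only ever used as continuation (parameters).
    """
    if contact.shape == "fist":
        return "delete"          # pound the table with a closed fist
    if contact.shape == "open_hand":
        return "copy"            # continuation: full-hand diverge
    if contact.finger_count == 2:
        return "pinch"           # two-point diverge (manipulation processor)
    if contact.finger_count == 1:
        return "move"
    return "unrecognized"


print(register(ContactDown(finger_count=5, shape="fist")))  # delete
```

Since `register` never looks at movement, the ‘pop’ and ‘jump’ problems above cannot occur: feedback can start on the very first frame.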

Hardware Dictates What’s Possible

Obviously, the Windows team are a bunch of smart cats (at least one of them did his undergrad work at U of T, so you know it’s got to be true). So why are several of the gestures in Windows 7 ambiguous at the moment of contact-down? Well, as your mom used to tell you, ‘it’s all about the hardware, stupid’. The Windows 7 OEM spec only requires hardware manufacturers to sense two simultaneous points of contact. Further, the only things we know about those points of contact are their x/y positions on the screen (see my previous article on ‘full touch vs. multi-touch’ to understand the implications). So, aside from the location of the contacts, there is only 1 bit of information about the gesture at the time of contact-down: whether there are 1 or 2 contacts on the display. With a message space of only 1 bit, it’s pretty darn hard to have a large vocabulary. Of course, some hardware built for Win 7 will be more capable, but designing for all devices means designing for the least capable.

In contrast, the next version of Microsoft Surface is aiming to track 52 points of contact. For a single hand, which can put down anywhere from one to five fingers, that means we have 3 bits of data about the number of contacts. Even better, we will have full shape information about the hand: rather than using different flick directions to specify delete vs. copy, the user simply pounds on the table with a closed fist to delete, or performs a full-hand diverge to copy (as opposed to the two-point diverge, which is ‘pinch’, as described above). It is critical to our plan that we fully leverage the Microsoft Surface hardware to enable a set of gestures with fewer errors and a better overall user experience.
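The bit counts above can be checked roughly. Assume the only thing that distinguishes gestures at contact-down is the number of contacts, ignoring position and shape, and treat each possible count as a distinct message. Then $N$ possible counts carry at most $\log_2 N$ bits and need $\lceil \log_2 N \rceil$ bits to encode:

$$
\begin{aligned}
\text{Windows 7 (1 or 2 contacts):}\quad & N = 2, && \log_2 2 = 1 \text{ bit} \\
\text{one hand on Surface (1 to 5 contacts):}\quad & N = 5, && \log_2 5 \approx 2.32 \text{ bits} \;\Rightarrow\; \lceil \log_2 5 \rceil = 3 \text{ bits}
\end{aligned}
$$

Hand shape adds further distinguishable states on top of the count. That is what makes a closed fist or a full hand usable as a registration signal.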

How will we Ensure A Common User Experience?

Everyone wants a user experience that is both optimal and consistent across devices. To achieve this, we are looking to build a system that will support both the Win 7 non-manipulation gesture language and our shape-enabled gesture language. The two languages will co-exist, and we will use self-revealing gesture techniques to transition users to the more reliable Surface system. Further, we will ensure that the Surface equivalents are logically consistent: users will learn a couple of rules, and then simply apply those rules to translate Win 7 gestures into Surface gestures.

Our Team's Work

Right now, we are in the process of building our gesture language. Keep an eye on this space to see where we land.