Recently, a PM on my team asked me this question. He was working with a group building an application for Microsoft Surface. They had already designed the application when they suddenly realized that the reactions they had assigned to ‘MouseOver’ could not be mapped to anything, since Surface does not have a ‘hover’ event.

Boy, was this the wrong question!

What we have here is a fundamental misunderstanding of how to design an application for touch. It’s not his fault – students trained in design, CS, or engineering build applications against the mouse model of interaction so often that its conventions become ingrained assumptions about the universe in which design is done. We have found this time and again when trying to hire onto the Surface team: a designer will present an excellent portfolio, often with walkthroughs of webpages or applications. These walkthroughs are invariably constructed as a series of screen states – and what transitions between them? Yep – a mouse click. The ‘click’ is so fundamental that it doesn’t even form a part of the storyboard – it’s simply assumed as the transition.

As we start to build touch applications, we need to start to teach lower-level understanding of interaction models and paradigms, so that the design tenets no longer include baseline assumptions about interaction, such as the ‘click’ as transition between states.

Mouse States and Transitions vs. Touch States & Transitions

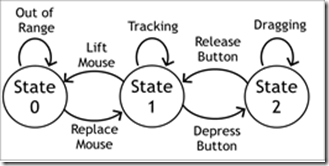

Quite a few years ago, a professor from my group at U of T (now a principal researcher at MSR) published a paper describing the states of mouse interaction. Here’s his classic state diagram, re-imagined a little with different terminology to make it more accessible:

Figure 1. Buxton’s 3-state model of 1-button mouse hardware

This model describes the three states of the mouse. State 0 is when the mouse is in the air (not tracking – sometimes called ‘clutching’, often done by users when they reach the edge of the mouse pad). State 1 is when the mouse is moving around the table, and thus the pointer is moving around the screen. This is sometimes called “mouse hover”. State 2 is when a mouse button has been depressed. Technically, it’s possible to transition directly between states 0 and 2 (by pressing a button when the mouse is in the air).

As Bill often likes to point out, the transitions are just as important as the states themselves, if not more so. It is the transitions between states that usually evoke events in a GUI system: the transition from state 1 to 2 fires the “button down” event in most systems, and 2 → 1 is “button up”. A 1 → 2 → 1 transition is a “click”, if done with no movement and in a short period of time.
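To make the point about transitions concrete, here is a minimal sketch of the 3-state model as a state machine in which events come from transitions rather than from the states themselves. The class name, the event names, and the click thresholds (`click_timeout`, `click_slop`) are my own illustrative assumptions, not taken from the paper or from any particular GUI toolkit.

```python
from enum import IntEnum


class MouseState(IntEnum):
    OUT_OF_RANGE = 0  # mouse lifted off the table, not tracking
    TRACKING = 1      # mouse moving on the table, pointer hovering
    DRAGGING = 2      # a button is held down


# Events are attached to transitions, not states (names are illustrative).
TRANSITION_EVENTS = {
    (MouseState.TRACKING, MouseState.DRAGGING): "button_down",
    (MouseState.DRAGGING, MouseState.TRACKING): "button_up",
}


class MouseModel:
    def __init__(self, click_timeout=0.3, click_slop=3.0):
        self.state = MouseState.TRACKING
        self.click_timeout = click_timeout  # assumed max seconds for a click
        self.click_slop = click_slop        # assumed max movement for a click
        self._down_at = None                # (time, x, y) of the last button_down

    def transition(self, new_state, t, x, y):
        """Move to new_state and return the list of events that fires."""
        events = []
        name = TRANSITION_EVENTS.get((self.state, new_state))
        if name:
            events.append(name)
        if name == "button_down":
            self._down_at = (t, x, y)
        elif name == "button_up" and self._down_at is not None:
            t0, x0, y0 = self._down_at
            moved = max(abs(x - x0), abs(y - y0))
            # A quick 1 -> 2 -> 1 round trip with little movement is a click.
            if t - t0 <= self.click_timeout and moved <= self.click_slop:
                events.append("click")
            self._down_at = None
        self.state = new_state
        return events
```

Note that a slow press and release still produces “button down” and “button up”, but no “click”: the click exists only as a property of the pair of transitions.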

Aside for the real geeks: the model shown in Figure 1 is a simplification: it does not differentiate between different mouse buttons. Also, it is technically possible to transition directly between states 0 and 2 by pushing the mouse button while the mouse is being held in the air. These and many other issues are addressed in the paper – go read it for the details, there’s some real gold.

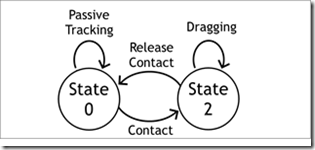

The problem the PM was confronting becomes obvious when we look at the state diagram for a touch system. Most touch systems have a similar, but different state diagram:

Figure 2. Buxton’s 2-state model of touch input: state names describe similar states from the mouse model, above.

As we can see, most touch systems have only two states: State 0, where the finger is not sensed, and State 2, where the finger is on the display. The lack of a State 1 is highly troubling: just think about all of the stuff that GUIs do in State 1. The mouse pointer gives a preview of where selection will happen, making selection more precise. Tooltips are shown in this state, as are highlights to show the area of effect when a mouse is clicked. I like to call these visuals “pre-visualizations”, or visual previews of what the effect of pushing the mouse button will be.

We saw in my article on the Fat Finger Problem that the need for these pre-visualizations is even more pronounced on touch systems, where precision of selection is quite difficult.

Aside for the real geeks: the model of a touch system described in Figure 2 is also a simplification: it is really describing a single-touch device. To apply this to multi-touch, think of these as states of each finger, rather than of the hardware.
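Here is a rough sketch of that per-finger view, assuming hypothetical hardware that reports each contact with a stable id. The class and event names are made up for illustration. The thing to notice is what is missing: a finger that is not on the display produces nothing at all, so there is no place for a hover event to come from.

```python
class TouchSurface:
    """Tracks each contact independently.

    A contact is either absent (state 0) or on the display (state 2).
    There is no state 1.
    """

    def __init__(self):
        # contact id -> (x, y); being present in this dict means state 2
        self.contacts = {}

    def down(self, contact_id, x, y):
        # 0 -> 2 transition for this finger
        self.contacts[contact_id] = (x, y)
        return ("contact_down", contact_id, x, y)

    def move(self, contact_id, x, y):
        if contact_id not in self.contacts:
            # State 0: the finger is not sensed, so nothing can be reported.
            return None
        self.contacts[contact_id] = (x, y)
        return ("contact_move", contact_id, x, y)

    def up(self, contact_id):
        # 2 -> 0 transition for this finger, reported at its last position
        x, y = self.contacts.pop(contact_id)
        return ("contact_up", contact_id, x, y)
```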

There are touch systems which have a ‘hover’ state, which sense the location of fingers and/or objects before they touch the device. I’ll talk more about the differences in touch experiences across hardware devices in a future blog post.

Touch and Mouse Emulation: Doing Better than the Worst (aka: Why the Web will Always Suck on a Touch Screen)

We’ll start to see a real problem as there are increasingly two classes of devices browsing the web at the same time: touch, and mouse. Webpage designers are often liberal in their assumptions of the availability of a ‘mouse over’ event, and there are examples of websites that can’t be navigated without it (Netflix.com leaps to mind, but there are others). It is in these hybrid places that we’ll see the most problems.

When I taught this recently to a class of undergrads, one asked a great question: the trackpad on her laptop also had only two states, so why is this a problem for direct-touch, but not for the trackpad? The answer lies in understanding how the events are mapped between the hardware and the software. In the case of a trackpad, it emulates a 3-state input device in software: the transitions between states 1 and 2 are managed entirely by the OS. It can be done with a physical button beside the trackpad (common), or operated by a gesture performed on the pad (‘tapping’ the pad is the most common).
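A simplified sketch of that software emulation might look like the following. It assumes a made-up stream of raw trackpad samples and made-up tap thresholds (`tap_timeout`, `tap_slop`); real drivers are far more elaborate. Touching and sliding only moves the pointer (state 1), while a quick touch-and-lift with almost no travel is turned into a synthesized 1 → 2 → 1 round trip.

```python
def trackpad_to_mouse(samples, tap_timeout=0.2, tap_slop=2.0):
    """Translate raw trackpad samples into emulated mouse events.

    samples: iterable of (time, kind, x, y), kind in {"touch", "move", "lift"}.
    The thresholds are illustrative assumptions, not values from a real driver.
    """
    events = []
    start_time = None  # time at which the current touch began
    last = None        # last known finger position
    travelled = 0.0    # total finger travel during the current touch
    for t, kind, x, y in samples:
        if kind == "touch":
            start_time, last, travelled = t, (x, y), 0.0
        elif kind == "move" and last is not None:
            dx, dy = x - last[0], y - last[1]
            travelled += abs(dx) + abs(dy)
            last = (x, y)
            # Finger on the pad maps to state 1: the pointer moves, no button.
            events.append(("pointer_move", dx, dy))
        elif kind == "lift" and start_time is not None:
            # A short touch with little travel is treated as a tap.
            if t - start_time <= tap_timeout and travelled <= tap_slop:
                events.append(("button_down",))
                events.append(("button_up",))
            start_time, last = None, None
    return events
```

The pointer moves by relative deltas here, which is exactly why the trick works on a trackpad: the finger and the pointer are in different places, so the finger being down does not have to mean the button is down.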

In a direct-touch system, this is harder to do, but not impossible. The trick lies in being creative in how states of the various touchpoints are mapped onto mouse states in software. The naïve approach is to simply overlay the touch model atop the mouse one. This model is the most ‘direct’, because system events will continue to happen immediately beneath the finger. It is not the best, however, because it omits state 1 and is imprecise.

The DT Mouse project from Mitsubishi Electric Research Labs is the best example of a good mapping between physical contact and virtual mouse states. DT Mouse was developed over the course of several years, and was entirely user-centrically designed, with tweaks done in real time. It is highly tuned, and includes many features. The most basic is that it has the ability to emulate a hover state – this is done by putting two fingers down on the screen. When this is done, the pointer is put into state 1, and positioned between the fingers. The transition from state 1 to 2 is done by tapping a third finger on the screen. An advanced user does this by putting down her thumb and middle finger, and then ‘tapping’ with the index finger:

Figure 3. Left: The pointer is displayed between the middle finger and thumb. Right: the state 1 → 2 transition is simulated when the index finger is touched to the display.
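The basic mapping described above can be sketched in a few lines. To be clear, this is not DT Mouse’s actual implementation, which is far more tuned and has many more features. It is only my illustration of the two-finger hover and third-finger tap idea, and it simply ignores the single-finger case. The function name and return shape are assumptions.

```python
def emulate_mouse(contacts):
    """Map the current contacts to an emulated mouse state.

    contacts: list of (x, y) tuples in the order the fingers touched down.
    Returns (state, pointer), where state is 0, 1, or 2 from the mouse model.
    Simplification: fewer than two contacts is treated as state 0 here.
    """
    if len(contacts) < 2:
        return (0, None)
    (x1, y1), (x2, y2) = contacts[0], contacts[1]
    # The pointer sits midway between the first two fingers.
    pointer = ((x1 + x2) / 2, (y1 + y2) / 2)
    # Two fingers down is hover (state 1); a third finger presses the button.
    state = 2 if len(contacts) >= 3 else 1
    return (state, pointer)
```

Because the pointer position depends only on the first two fingers, tapping the third finger does not nudge the pointer, which keeps the emulated click precise.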

So, now we see there are sophisticated ways of doing mouse emulation with touch. But, this has to lead you to ask the question: if all I’m using touch for is mouse emulation, why not just use the mouse?

Design for Touch, Not Mouse Emulation

As we now see, my PM friend was making a common mistake: he started by designing for the mouse. In order to be successful, designers of systems for multi-touch applications should start by applying rules about touch, and assigning state changes to those events that are easily generated using a touch system.

States and transitions in a touch system include the contact state information I have shown above. In a multi-touch system, we can start to think about combining the state and location of multiple contacts, and mapping events onto those. These are most commonly referred to as gestures – we’ll talk more about them in a future blog post.

For now, remember the lessons of this post:

- a. Re-train your brain (and the brains of those around you) to work right with touch systems. It’s a 2-state system, not a 3-state one.

- b. Mouse emulation is a necessary evil of touch, but definitely not the basis for a good touch experience. Design for touch first!

Our Team’s Work

The Surface team is tackling this in two ways: first, we’re designing a mouse emulation scheme that will take full advantage of our hardware (the Win 7 effort, while yielding great results, is based on more limited hardware). Second, we are developing an all-new set of ‘interaction primitives’, which are driven by touch input. This means the end of ‘mouse down’, ‘mouse over’, etc. They will be replaced with a set of postures, gestures, and manipulations designed entirely for touch, and mapped onto events that applications can respond to.

The first example of this is our ‘manipulation processor’, which maps points of contact onto 2-dimensional spatial manipulation of objects. This processor has since been rolled out into Win 7 and Windows Mobile, and will soon be in both .NET and WPF. Look for many more such contributions to NUI from our team moving forward.
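To give a flavour of what mapping contacts onto 2-dimensional manipulation means, here is a toy sketch for the two-finger case. It is not the API or the algorithm of our manipulation processor. The function name and the returned dictionary are hypothetical, and it assumes the two contacts are never at the same point.

```python
import math


def manipulation_delta(prev, curr):
    """Derive translate/scale/rotate from two contacts at two moments.

    prev, curr: [(x, y), (x, y)] for the same two contacts, before and after.
    Assumes the two contacts in prev are distinct points.
    """
    (ax0, ay0), (bx0, by0) = prev
    (ax1, ay1), (bx1, by1) = curr
    # Translation: how far the midpoint between the fingers moved.
    tx = (ax1 + bx1) / 2 - (ax0 + bx0) / 2
    ty = (ay1 + by1) / 2 - (ay0 + by0) / 2
    # The vector from the first finger to the second, before and after.
    vx0, vy0 = bx0 - ax0, by0 - ay0
    vx1, vy1 = bx1 - ax1, by1 - ay1
    # Scale: ratio of finger separation after versus before.
    scale = math.hypot(vx1, vy1) / math.hypot(vx0, vy0)
    # Rotation: signed angle between the two vectors, in radians.
    rotate = math.atan2(vx0 * vy1 - vy0 * vx1, vx0 * vx1 + vy0 * vy1)
    return {"translate": (tx, ty), "scale": scale, "rotate": rotate}
```

An application would respond to the resulting deltas rather than to ‘mouse down’ or ‘mouse move’, which is the whole point of building primitives on touch input.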
