This entry is meant to address a myth that we encounter time and again: the Myth of the Natural Gesture.

The term “Natural User Interface” evokes mimicry of the real world. A naïve designer looks only to the physical world and copies it, in the hope that this will create a natural user interface. Paradoxically, mimicking the physical world will not yield an interface that feels natural. Consider two examples to illustrate the point.

First, consider the GUI, or graphical user interface. Our conception of the GUI is based on the WIMP toolset (windows, icons, menus, and pointers). WIMP is actually built on a principle of ‘manipulation’: the physical movement of objects according to naïve physics. The mouse pointer is a disembodied finger, poking, prodding, and dragging content around the display. In short, if ‘natural’ means mimicking nature, we have already done that, and it got us the GUI.

Second, consider the development of the first-generation Microsoft Surface gestures. The promise of the NUI is an interface that is immediately intuitive and requires minimal effort to operate. In designing the Surface gestures, we constantly asked: “What is natural to the user?” This question is a proxy for “What gesture would the user most likely perform at this point to accomplish this task?” Both are the wrong question. Here’s why:

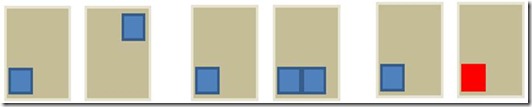

Imagine an experiment intended to elicit the ‘natural’ gesture set – that set of gestures users would perform without any prompting. You might design it like this: show the user screen shots of your system before and after the gesture is performed. Then, ask them to perform the gesture that they believe would cause that transition. Here’s an example:

In two experiments conducted by teams at Microsoft Surface and MSR, there was almost no congruence among the user-defined gestures, even for the simplest operations (MSR work).

A solution to this lies in the realization that both studies were intentionally conducted free of context: there were no on-screen graphics to induce a particular behaviour. Imagine trying to use a GUI slider without a thumb painted on the screen to show you the current level, induce a behaviour (dragging), and give feedback during the operation so you know when to stop. As we develop NUI systems, they too will need to provide affordances and graphics of their own.

So, if you find yourself trying to define a gesture set for some platform or experience, consider the universe you have built for your user: what do the on-screen graphics afford them to do? User response is incredibly contextual. Even in the real world, the ‘pick up’ gesture for an object differs when that object has been superheated. If there is no obvious gesture in the context you have created, the rules and world you have built are incomplete, so expand them. But don’t fall back on some other world (real or imagined).

While the physical world and users’ previous experience provide a tempting target (a ready-built world with understood rules that will elicit likely responses), relying on them alone when building a user interface will be disastrous, because no single gesture is actually ‘natural’. Relying on some mythical ‘natural’ gesture will only yield frustration for your users.
